NYCJUG/2014-12-09

colormap, palette, Matlab, Emscripten version of J, client-side J for browser, automation versus skill, one-letter programming languages

Meeting Agenda for NYCJUG 20141209

1. Beginner's regatta: see "Developing Mandelbrot". Also, take a look at "A New Colormap for Matlab".

2. Show-and-tell: see "Emscripten Version of J" and "full.html".

3. Advanced topics: see "K as a Prototyping Language".

4. Learning, teaching and promoting J, et al.: see "Attack of the One-letter Programming Languages" and "More Automation: Less Capable Developers?" See "J-Class Yacht".

Beginner's regatta

Here we look at the basic code for generating the Mandelbrot set. Also, since this raises the question of what palette to use when displaying the set, we look at work being done in Matlab to settle on a default palette.

Developing Mandelbrot

The basic verb we apply iteratively to calculate points in a Mandelbrot set is this in J:

(+*:)

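Used monadically, this hook adds (+) its argument to its own square (*:); used dyadically, as in the iteration later in this section, it adds its left argument to the square of its right argument. The nouns to which this verb is applied are points on the complex plane. We can start with a well-positioned set of these by defining this noun: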

m1k=: (512%~512-~i.1024)j.~/419%~_825+i.1024

This has a few “magic numbers” we’ll explain only generally but the most basic ones are the two instances of “1024” as these define the dimensions of the resulting set: 1024 by 1024; the first occurrence defines the number of rows, the second defines the number of columns. So,

$m1k

1024 1024

The other numbers are of two types: divisors and subtrahends. The divisors scale a sequence and the subtrahends shift the grid of complex numbers one direction or the other.
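For readers who do not have J at hand, the construction of m1k can be sketched in Python. This is only an illustration under stated assumptions: the function name `make_grid` and its parameter names are hypothetical, nested lists stand in for J's matrix, and the arithmetic simply mirrors the divisors and subtrahends in the J expression.

```python
def make_grid(n=1024, row_shift=512, row_div=512, col_shift=825, col_div=419):
    """Return an n-by-n nested list of complex points laid out like m1k.

    make_grid and its parameter names are hypothetical; the defaults are the
    "magic numbers" from the J definition of m1k.
    """
    # Left argument of the J table: (i - 512) / 512, used as the imaginary part.
    imag_parts = [(i - row_shift) / row_div for i in range(n)]
    # Right argument of the J table: (i - 825) / 419, used as the real part.
    real_parts = [(i - col_shift) / col_div for i in range(n)]
    # One row per imaginary value, one column per real value.
    return [[complex(re, im) for re in real_parts] for im in imag_parts]


grid = make_grid()
print(len(grid), len(grid[0]))   # dimensions, as reported by $m1k in J
print(grid[0][0], grid[-1][-1])  # two opposite corners of the grid
```

Changing a divisor stretches or compresses the grid along one axis, and changing a subtrahend slides it, which is the scaling and shifting described above.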

To visualize this matrix, we can use the standard “viewmat” verb:

load 'viewmat'

viewmat m1k

This comprehensive view hides the detail that viewmat provides for a matrix of complex numbers. We can see this detail if we sample only every 32nd row and column, like this:

xx=. 1024$32{.1

viewmat xx#xx#"1 m1k

[[File:viewmat_m1k.png height="346",width="337"]] [[File:ViewmatOfDecimated_m1k.png height="335",width="337"]]

This latter view shows us arrows representing the direction of the real and imaginary components of the complex numbers.

The colors range from dark blue for the values near the origin to magenta for the values furthest from the origin.

Iterating the Mandelbrot Function

We can most simply apply the Mandelbrot function to the complex plane iteratively using J’s “power” conjunction:

mm=. ((+*:)^:10)~ m1k

Here we apply it ten times, each time taking the result of the previous iteration as the right argument and retaining the original as the left argument. Even these few iterations give us an encouraging picture, eventually. First, however, we have to deal with this error.

viewmat mm

|NaN error

| ang=. *mat

Examining our result, we can easily see that the points most distant from the origin have gone to infinity.

3 3{.mm

_j_ __j_ __j__

_j_ _j_ __j_

_j__ _j_ __j_

We can exclude these extreme points based on the magnitudes of the complex numbers, using J’s monadic “|” verb, which gives the complex equivalent of absolute value by returning the distance of a complex number from the origin, e.g.

| 1j1 1j2 2j2 3j4

1.41421 2.23607 2.82843 5

Since it seems that any complex number with a magnitude greater than, say, two is liable to grow very quickly, let’s exclude those from our view by replacing them with zero:

viewmat (2<|mm)}mm,:0
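The iterate-then-mask idea can also be sketched in Python. This is a rough illustration, not a translation of the J: the names `iterate_point` and `iterate_grid` are hypothetical, and, unlike the J expression (which iterates every point ten times and masks afterwards), the sketch stops as soon as a magnitude passes the limit so that it never has to handle infinities or NaNs. That should not change which points are masked, since a point whose magnitude has passed two keeps growing.

```python
def iterate_point(c, steps=10, limit=2.0):
    """Apply z -> c + z*z `steps` times, starting from z = c.

    Returns 0j for a point whose magnitude passes `limit`, else the final z.
    The name and the early exit are assumptions made for this sketch.
    """
    z = c
    for _ in range(steps):
        z = c + z * z
        if abs(z) > limit:
            return 0j  # treated like the points the J mask replaces with 0
    return z


def iterate_grid(grid, steps=10, limit=2.0):
    """Apply iterate_point to every element of a nested list of complex numbers."""
    return [[iterate_point(c, steps, limit) for c in row] for row in grid]


# A tiny 3-by-3 grid, rather than the 1024-by-1024 m1k, keeps the example quick.
small = [[complex(re, im) for re in (-1.0, 0.0, 1.0)] for im in (-1.0, 0.0, 1.0)]
for row in iterate_grid(small):
    print(row)
```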

A New Colormap for MATLAB – Part 1 – Introduction

Posted by Steve Eddins, October 13, 2014

I believe it was almost four years ago that we started kicking around the idea of changing the default colormap in MATLAB. Now, with the major update of the MATLAB graphics system in R2014b, the colormap change has finally happened. Today I'd like to introduce you to parula, the new default MATLAB colormap:

showColormap('parula','bar')

(Note: I will make showColormap and other functions used below available on MATLAB Central File Exchange soon.)

I'm going spend the next several weeks writing about this change. In particular, I plan to discuss why we made this change and how we settled on parula as the new default.

To get started, I want to show you some visualizations using jet, the previous colormap, and ask you some questions about them.

Question 1: In the chart below, as you move from left to right along the line shown in yellow, how does the data change? Does it trend higher? Or lower? Or does it trend higher in some places and lower in others?

fluidJet

hold on

plot([100 160],[100 135],'y','LineWidth',3)

hold off

colormap(gca,'jet')

{{attachment:FluidJetColorMap.png||height="451",width="601"}}

Question 2: In the filled contour plot below, which regions are high and which regions are low?

filledContour15

colormap(gca,rgb2gray(jet))

The next questions relate to the three plots below (A, B, and C) showing different horizontal oscillations.

Question 3: Which horizontal oscillation (A, B, or C) has the highest amplitude?

Question 4: Which horizontal oscillation (A, B, or C) is closest to a pure sinusoid?

Question 5: In comparing plots A and C, which one starts high and goes low, and which one starts low and goes high?

subplot(2,2,1)

horizontalOscillation1

title('A')

subplot(2,2,2)

horizontalOscillation2

title('B')

subplot(2,2,3)

horizontalOscillation3

title('C')

colormap(jet)

Next time I'll answer the questions above as a way to launch into consideration of the strengths and weaknesses of jet, the previous default colormap. Then, over the next few weeks, I'll explore issues in using color for data visualization, colormap construction principles, use of the L*a*b* color space, and quantitative and qualitative comparisons of parula and jet. Toward the end, I'll even discuss how the unusual name for the new colormap came about. I've created a new blog category (colormap) to gather the posts together in a series.

If you want a preview of some of the issues I'll be discussing, take a look at the technical paper "Rainbow Color Map Critiques: An Overview and Annotated Bibliography." It was published on mathworks.com just last week.

Published with MATLAB® R2014b

Comments on Matlab Palette

Interestingly, even this display-oriented essay brings up calls for better performance.

16 Comments (oldest to newest)

Royi replied on October 14th, 2014 at 02:13 UTC:1 of 16

Steve,

When can we expect a real overhaul of the JIT engine of MATLAB?

There has been major development in that field.

Modern JIT engines (Google’s V8, LUA and Julia) offer performance which is close to compiled code.

When can we have it in MATLAB?

We really need MATLAB to get faster, real faster.

Steve Eddins replied on October 14th, 2014 at 07:13 UTC:2 of 16

Royi—Normally, I restrict comments to be relevant to the posted topic, but I appreciate your feedback about MATLAB. As a matter of long-standing policy, we rarely say anything in public forums about future product plans.

Royi replied on October 14th, 2014 at 10:30 UTC:3 of 16

Hi Steve,

Thank you for accepting my comment.

Well,

I wrote it as I see big changes in MATLAB (The UI in 8.x, the Graphics in R2014b) which are all blessed and great by themselves (And very well executed). Yet I saw nothing targeted what’s most important in my opinion – Performance of the code.

I think, with today’s performance of the latest Script languages, we might use MATLAB in operational code.

We just need the JIT engine for that.

Thank You.

Eric replied on October 16th, 2014 at 16:20 UTC:4 of 16

It was a really pleasant surprise to see the introduction of a perceptual colormap in MATLAB, and especially the guts it took to make it the default – bravo! :) Maybe future releases could have a few more options, even if they aren’t as theoretically ‘perfect’ as parula, it’d be nice to have a few different-looking perceptual colormap choices available.

Steve Eddins replied on October 16th, 2014 at 16:52 UTC:5 of 16

Eric—Thanks for the kind words! I admit that I was a bit hesitant at one point about whether to change the default. Two things happened to increase my confidence in the decision. First, a customer in an early feedback program complained that the new colormap looked “noisy.” I realized that the customer’s data was noisy, and the jet colormap was hiding that. Second, someone extremely familiar with the jet colormap gave me an eye-opening answer to Question 1 in the blog post above.

And thanks also for the suggestion to expand the colormap choices.

Oliver replied on October 17th, 2014 at 04:36 UTC:6 of 16

Dear Steve,

Although Royi may be off-topic he is making an important point here in my opinion: SPEED

I wish your first R2014b related post would have been: HG2 makes image rendering 10x faster

New Colormap, graphics smoothing, Object Dot-Notation, etc. may be welcomed by many MATLAB users, but I was looking for rendering speed since I first heard rumors about HG2 a few years ago.

This code:

hFig = figure();

hAxes = axes('Parent',hFig);

rgb = zeros(1080,1920,3,'uint8');

hImg = image(rgb,'Parent',hAxes);

set(hAxes,'Visible','off');

times = zeros(1,3);

tic

for i = 1:255

tic

rgb = rgb + 1;

times(1) = times(1) + toc;

tic

set(hImg,'CData',rgb);

times(2) = times(2) + toc;

tic

drawnow

times(3) = times(3) + toc;

end

times

% Frames per second

tEnd = toc;

fprintf('Frames per second: %.0f\n',255/sum(times));

actually runs slower on R2014b compared to R2014a on my DELL T3610 with NVIDIA GTX980, unless you switch to drawnow expose, which is not what I want. At least setting CData is significantly faster but drawnow eats it all up again.

I feel that JAVA is limiting MATLAB very much in the field of video, although I understand that MATLAB image rendering may just be fast enough for most users.

No offense, Steve, but I am disappointed about HG2. Don’t get me wrong, I am a 9to5 MATLAB user since 10 years and it’s the language I like to use. The GPU computing stuff that matured over the last years is very useful for image processing. In that context setting CData with gpuArrays would be highly appreciated, assuming that data is not making the roundtrip via the CPU. I am sure MATHWORKS thinks about that already. So +1 from me here.

Oliver

ps.: HG2 has basically broken font rendering using “text” on every platform, unless you turn off “FontSmoothing” (Although I am sure there are good technical reasons for that, it is unacceptable)

pps.: No need to publish this – won’t help anyone.

Steve Eddins replied on October 17th, 2014 at 07:08 UTC:7 of 16

Oliver—Thanks. I have passed your comments along to the graphics team. Can you say more about what you mean with respect to text rendering? What I see on my screen looks good.

Steve Eddins replied on October 17th, 2014 at 10:30 UTC:8 of 16

Oliver—The graphics team is already looking at your benchmark, so thanks for sending it. One follow-up question for you: Why is drawnow expose not appropriate for you?

Eric replied on October 17th, 2014 at 12:28 UTC:9 of 16

IMHO, the ‘value’ of making parula the default is worth the user ‘pain’ induced by the change, if it prevents a single instance of false data interpretation in an application in the life sciences, safety-critical aerospace/automotive/space, engineering thermodynamics/fluid dynamics, etc. And given the size of the worldwide MATLAB user base, I’d say this is a pretty safe bet… On that note, what about making a parula.m colormap generator m-file available as an official TMW file exchange item, for folks to use pre-2014b? The help text could even include a suggestion on how to set it as the default colormap using startup.m,

One question I do have – I noticed that the top end of the colormap is a shade of yellow. Is that not going to cause any issues with contrast on LCD monitors, especially projectors? That’s why I thought that yellow text (esp on a white background, such as the stock figure background) was not recommended to be used in presentations intended to be shown on projectors.

BTW:

oliver replied on October 17th, 2014 at 13:14 UTC:10 of 16

Dear Steve,

Font Rendering using “text”, not uicontrol(‘Style’,’text’), looks blurry instead of smoothed to me. I assume no sub pixel smoothing ala ClearType is done. Just start “bench” and you will immediately notice the difference between the figures. On my Retina MacBook the bar plot labels are nearly unreadable, while the table plot is crisp. On Windows with 1920×1200 it’s not that bad but still inferior to ClearType. This was not the case with R2014a.

drawnow expose is not an option because it does not handle the system events, especially the mouse click callbacks. Imagine an interactive video player that you cannot stop after start.

Oliver

Steve Eddins replied on October 17th, 2014 at 13:56 UTC:11 of 16

Eric—Thanks. I’m with you on the value of the colormap change. Parula has actually been quietly shipping with MATLAB since R2013b. I’ll look into other options.

Regarding yellow … well, the overall set of design criteria makes the whole problem highly over constrained. If we want monotonically increasing lightness to provide the appropriate perceptual cues and to assist color-impaired viewers, then the top of the colormap is going to be pretty bright and not have much contrast against white. I don’t think that will be a problem very often in practice, though, because what you’re really getting is contrast with the rest of the data, not contrast against a white background. I’m sure there will be some cases where it’s not ideal, but it’s difficult making one colormap that works in every scenario. Working well with projectors turns out to be a pretty tight constraint. Go to ColorBrewer site and check the box that says “projector friendly.” It eliminates almost everything.

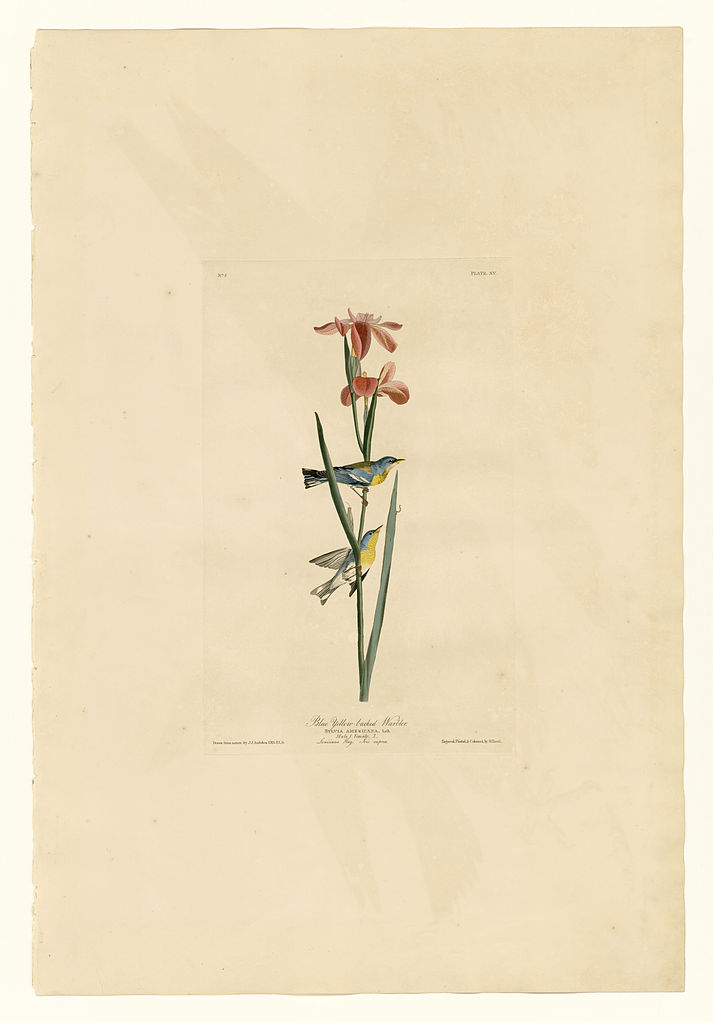

PS. Nice alternative bird image!

Steve Eddins replied on October 17th, 2014 at 13:57 UTC:12 of 16

Oliver—Thanks for the additional details. It’s helpful.

Aditya Shah replied on October 21st, 2014 at 08:11 UTC:13 of 16

Hi Steve – very interesting. I read in one of the comments about “perceptual colormap” – I hope you will be expanding upon this in future blog posts.

On a related note, today I came across two interesting articles related to using color for maps and how rainbow color maps have drawbacks.

http://www.wired.com/2014/10/cindy-brewer-map-design/

Steve Eddins replied on October 21st, 2014 at 08:17 UTC:14 of 16

Aditya—Yes, I will be talking about various colormap issues, including the concept of perceptual uniformity. I saw the Wired article, too. Cynthia Brewer’s ColorBrewer is mentioned by everyone who says anything about using color scales for data visualization.

Eric replied on October 22nd, 2014 at 12:17 UTC:15 of 16

Steve, what do you think about the colormaps presented on this page/paper as potential future inclusions?

http://www.sandia.gov/~kmorel/documents/ColorMaps/

Steve Eddins replied on October 22nd, 2014 at 12:38 UTC:16 of 16

Eric—People started asking me about divergent colormaps as soon as I started blogging about the new default colormap. I’m interested. We’ll see.

Show-and-tell

We discussed this exciting development in J: the availability of a client-side J interpreter, compiled to JavaScript with Emscripten, that runs in the browser without an install.

Emscripten Version of J

[Jchat] emscripten J ide

Joe Bogner (joebogner at gmail.com) / Tue Nov 25 21:53:09 UTC 2014

I found out a few weeks ago that someone had ported J to emscripten[1] . I couldn't find any contact information for the author, so I just went ahead and scraped the site to get the source.

I've posted it to github with a demo ide

http://joebo.github.io/j-emscripten/

I think there's a tremendous amount of potential here. It runs on my ipad, android and desktop. It can integrate in with javascript canvas -- see my interop example:

'drawRect' (15!:0) (10,10,10,10)

I can envision making the labs interactive and also allowing people to save and share their code. All of this running safely in the browser without an install required. We can also play with different IDE concepts. For example, I added quick picklists to Devon's Minimal Beginning J.

I went through the painful effort of trying to cut down the javascript required to run the environment. I've been able to get j-called.min.js down to 446KB minified and compressed. The full version is also available at

http://joebo.github.io/j-emscripten/full.html and weighs in at 2MB of javascript. If you get an error about something missing, try the full version.

My IDE code is still messy but posted here: https://github.com/joebo/j-emscripten/blob/master/index.html. One of the most challenging parts was figuring out how to interop with emscripten, but I was able to implement a function that lets the script get loaded and also a fake 15!:0 for interop calls.

Try it out and provide any feedback and I can update it. Alternatively, fork it and make your own version and post it here. Javascript makes J incredibly hackable and shareable.

[1] - found originally at http://tryj.tk/

---

[From http://jsfiddle.net/50Lf2m6v/1/]

<script>

var Module = {

noInitialRun: true,

print: (function() {

return function(text) {

var old = document.getElementById('Output').innerHTML;

document.getElementById('Output').innerHTML = old + '<br>' + text;

};

})()

};

</script>

<script src="http://joebo.github.io/j-emscripten/j-called.min.js"></script>

<script src="processWrapper.js"></script>

<textarea id="Input"><"0 i.5</textarea>

<input type="button" value="Run" onClick="Run()"/>

<div id="Output"></div>

Where “processWrapper.js” is this:

Module.ccall('main', null, null, null);

var process = Module.cwrap('process_wrapper', null, ['string']);

function Run() {

document.getElementById('Output').innerHTML = '';

var input = document.getElementById('Input').value;

process(input);

}

Here’s what the page using the smaller version looks like:

Pressing the “Run” button on the lower right pane gives the display shown beneath it.

The full version looks like this:

Eric Iverson (eric.b.iverson at gmail.com) / Tue Nov 25 23:02:39 UTC 2014

Couldn't help myself, and took a quick look at the emscripten site. Amazing. The ability to compile the J engine C source to javascript is exciting! I hope others will take a serious look at this. The ultimate in portability.

---

Joe Bogner (joebogner at gmail.com) / Tue Nov 25 23:10:27 UTC 2014

Eric,

I don't have the source code that was run through emscripten since that was done by 'f211'. I am familiar with emscripten though, so I can comment on it at a high level.

You can probably get much more from http://kripken.github.io/emscripten-site/

function _jtiota1(a, f, d) {

var c, b;

c = 0 == (HEAP32[f + 24 >> 2] | 0) ? 1 : HEAP32[f + 28 >> 2];

f = _jtga(a, 4, c, 1, 0);

if (0 == (f | 0)) return 0;

if (0 == (c | 0)) return f;

a = 0;

for (d = f + HEAP32[f >> 2];;)

if (a += 1, HEAP32[d >> 2] = a, (a | 0) == (c | 0)) {

b = f;

break

} else d += 4;

return b

}

var rank = function(f) { return HEAP32[16+f>>2] }

var shape = function(f) { return HEAP32[20+f>>2] }

var ravelPtr = function(f) { return HEAP32[f>>2]; }

var val = function(f,size,idx) {

return HEAP32[(ravelPtr(f) + (idx*size) +f)>>2];

}

var typei = function(f) { return HEAP32[12+f>>2] };

F1(jtiota){A z;I m,n,*v;

F1RANK(1,jtiota,0);

if(AT(w)&XNUM+RAT)R cvt(XNUM,iota(vi(w)));

RZ(w=vi(w)); n=AN(w); v=AV(w);

if(1==n){m=*v; R 0>m?apv(-m,-m-1,-1L):IX(m);}

RE(m=prod(n,v)); z=reshape(mag(w),IX(ABS(m)));

DO(n, if(0>v[i])z=irs1(z,0L,n-i,jtreverse););

R z;

}
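The C function above, from the J engine source, implements J's monadic i. (integers). As a rough guide to what its scalar branch (the `1==n` case) computes, here is a Python sketch. The name `iota` is borrowed from the C source, but this Python function is an illustration only: it handles a single integer argument and ignores the extended-precision and multi-axis branches.

```python
def iota(m):
    """Sketch of J's monadic i. for a single integer argument.

    Non-negative m gives 0, 1, ..., m-1. Negative m gives the same integers
    for |m| in descending order, which is what the arithmetic progression
    apv(-m, -m-1, -1) in the C source appears to build.
    """
    if m >= 0:
        return list(range(m))
    return list(range(-m - 1, -1, -1))


print(iota(5))   # [0, 1, 2, 3, 4]
print(iota(-5))  # [4, 3, 2, 1, 0]
```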

Advanced topics

We looked at this introduction to K as a prototyping language from Dennis Shasha's course on advanced algorithms at New York University's Courant Institute of Mathematical Sciences. Much of what he has to say about K in general also applies to J.

K as a Prototyping Language

Dennis Shasha

Courant Institute of Mathematical Sciences

Department of Computer Science

New York University

shasha@cs.nyu.edu

http://cs.nyu.edu/cs/faculty/shasha/index.html

Download

Download K from the Kx Systems web site. This takes about one minute and includes a powerful database system.

Now that you have the software, please execute the examples as you go.

Lesson Plan

- start with examples

- evolve to concepts

- program them

Quick Tour of K

Basic arithmetic in K:

2+3

3*2

/ Right to left precedence:

3*2+5 / yields 21

/ Assignment

abc: 3*15

cdf: 15% 3 / division is percent sign

x: 5 3 4 10

/ scalar-vector operations

3*x

Vector style operations:

/ prefix sum

+\3*x

/ element by element operations

x*x

/ cross-product of all elements

/ read this "times each right each left"

x*/:\:x

/ generating data

y: 10

/ multiplication table, each left, each right (cross-product)

y*\:/:y

show $ y

y:y*y / variable multiplication causes gui representation to be updated.

/ Try updating the gui and they will be updated.

/ tables

n: 1000

names: `bob `ted `carol `alice

emp.salary: 20000 + n _draw 10000

emp.name: names[n _draw #names]

emp.manager: names[n _draw #names]

show $ emp

/ modify salary to be twice the salary

emp.salary: 2*emp.salary

/ create a chart

emp.salary..c:chart

show $ emp.salary
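For readers without a K interpreter, the vector operations at the start of this tour can be approximated in Python. This is a loose sketch rather than a translation: Python lists stand in for K vectors, and the variable names `scaled`, `prefix`, `squares` and `table` are hypothetical.

```python
from itertools import accumulate

x = [5, 3, 4, 10]

scaled = [3 * v for v in x]                  # 3*x      scalar-vector multiply
prefix = list(accumulate(scaled))            # +\3*x    prefix (running) sum
squares = [a * b for a, b in zip(x, x)]      # x*x      element by element
table = [[a * b for b in x] for a in x]      # x*/:\:x  every pairing of elements

print(scaled)
print(prefix)
print(squares)
for row in table:
    print(row)
```

Each K expression needs no loop at all, whereas the Python version spells out a comprehension per operation; that difference is a large part of what the tour is demonstrating.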

Functions

/ present value calculation

presentval:{[amount; discount; exponent]

  +/ (amount%(discount^exponent))}

amount: 100 125 200 150 117

discountrate: 1.05

exponent: 0 0.5 1 1.5 2

presentval[amount;discountrate;exponent]
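The same present value calculation can be sketched in Python, using the data from the K example. The function name `present_value` is hypothetical; the arithmetic follows the K definition, dividing each amount by the discount rate raised to the matching exponent and summing the results.

```python
def present_value(amounts, discount, exponents):
    """Sum of amount / discount**exponent over paired amounts and exponents."""
    return sum(a / discount ** e for a, e in zip(amounts, exponents))


amount = [100, 125, 200, 150, 117]
discountrate = 1.05
exponent = [0, 0.5, 1, 1.5, 2]

print(round(present_value(amount, discountrate, exponent), 2))
```

Where K applies the division and the power to whole vectors at once, the Python version pairs the elements explicitly with `zip`.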

Why is K Good?

Extremely fast.

Usually faster than the corresponding C program, e.g. present value calculation. Almost always faster than Sybase or Oracle.

Can do a lot and can do it easily (bulk data objects like tables, a passable graphical user interface, interprocess communication, web, and calculation).

Working in a single language reduces errors, increases speed yet further, and is more fun because you can concentrate on the algorithm.

(Note: Many errors and much overhead come from integrating different languages, e.g. C/Perl/Sybase/GUI.)

Interpreted so very fast debug cycle; no seg faults; upon error can query all variables in scope. Bad part: no declared types so get some type errors you wouldn't otherwise get.

Why is K Bad?

Small existing market: around 1000 programmers.

(But highly paid ones.)

A challenge to learn for those used to scalar programming languages like C, Java, Pascal, etc.

Learning and Teaching J

We looked at one of the rare mainstream mentions of J in this InfoWorld article. The overheated prose starts out like this:

Attack of the One-letter Programming Languages

From D to R, these lesser-known languages tackle specific problems in ways worthy of a cult following

By Peter Wayner / InfoWorld | Nov 24, 2014

Watch out! The coder in the next cubicle has been bitten and infected with a crazy-eyed obsession with a programming language that is not Java and goes by the mysterious name of F. The conference room has become a house of horrors, thanks to command-line zombies likely to ambush you into rewriting the entire stack in M or R or maybe even -- OMG -- K. Be very careful; your coworkers might be among them, calm on the outside but waiting for the right time and secret instructions from the mothership to trash the old code and deploy F# or J.

It continues by talking briefly about the languages D, F (a "cleaned-up" Fortran), F#, M, P, and R before saying this about J and K:

One-letter programming language: J

Once upon a time, a manager counted the lines of software coming out of the cubicle farm and determined that programmers wrote N lines of code a day. It didn't make a difference what language was used -- the company would get only N lines out of them. The manager promptly embraced APL, the tersest, most powerful language around, created by IBM for manipulating large matrices of numbers, complete with special characters representing complex functions for further terseness.

Along the way, everyone got tired of buying special keyboards from IBM, but they loved the complex functions that would slice and dice up big matrices of data with a few keystrokes. J is one of the spinoffs that offers the power of APL, but with a normal character set.

If you have vast arrays of data, you can choose one column and multiply it by another with a few characters. You can extract practically any subset with a few more characters, then operate on it as if the complex subset were a scalar. If you want to generate statistical abstracts, the creators of J have built large libraries full of statistical functions because that's what people do with big tables of data.

J on the Web: http://www.jsoftware.com/

One-letter programming language: K

J is not the only sequel to try to bring APL to a bigger audience by remapping everything to a standard keyboard. K comes from a different group, the crew that first built A, then A+. After that, they jumped inexplicably to the letter K, which offers many of the same extremely powerful constructs for crushing vectors and multidimensional arrays. You can express extremely elaborate algorithms for working with arrays in a few keystrokes.

It's worth marveling at the stark power of this one-line program to find all prime numbers less than R. It's barely even half a line:

(!R)@&{&/x!/:2_!x}'!R

In fact, it's less than half a line -- it's exactly 21 characters. You could probably pack a K program for curing cancer into a single tweet. If you're crunching large multidimensional arrays of business data into answers, you can save your fingers a lot of work with K.

K on the Web: http://kx.com/

At least one comment attempted to address an important point neglected about these latter two languages:

The unique difference of languages like J (as well as APL and A, and, to a lesser extent K) is not the short names or the terseness of their code - though this is helpful for compressing complex concepts into sufficiently small chunks to fit in working memory - but that these are notations for reasoning about computational concepts.

In the book "How Humans Learn to Think Mathematically", David Tall outlines a plausible ontogeny of the development of learning: how we advance from simple concepts - like counting on our fingers - to more complex ones - like simple addition. However, the initial, simple concepts, while helpful at first, sometimes interfere with advancing to more complex concepts: counting on your fingers works for adding very small numbers but becomes unwieldy for large numbers and impossible for slightly more advanced concepts like subtraction and negative numbers.

In much the same way, most traditional programming languages are still enmeshed in their ancestry as machine-level micro-code: their instructions are based on what was once feasible to engineer as a computer. The array-processing languages like J approach programming from another direction: how we reason with high-level computational concepts.

Both approaches have their strengths and weaknesses: traditional, compiled languages provide execution speed at the cost of extreme simplification whereas array-languages help us deal with greater complexity by providing a notation for reasoning about it. At some point, you have to stop counting on your fingers and learn mathematical notation in order to advance.

More Automation: Less Capable Developers?

New Book Argues Automation Is Making Software Developers Less Capable

Posted on Monday November 10, 2014 @07:01PM

from the more-time-to-browse-the-internet-though dept.

dcblogs writes: Nicholas Carr, who stirred up the tech world with his 2003 essay, IT Doesn't Matter in the Harvard Business Review, has published a new book, The Glass Cage, Automation and Us, that looks at the impact of automation of higher-level jobs. It examines the possibility that businesses are moving too quickly to automate white collar jobs. It also argues that the software profession's push to "to ease the strain of thinking is taking a toll on their own [developer skills]." In an interview, Carr was asked if software developers are becoming less capable. He said, "I think in many cases they are. Not in all cases. We see concerns — this is the kind of tricky balancing act that we always have to engage in when we automate — and the question is: Is the automation pushing people up to higher level of skills or is it turning them into machine operators or computer operators — people who end up de-skilled by the process and have less interesting work?

I certainly think we see it in software programming itself. If you can look to integrated development environments, other automated tools, to automate tasks that you have already mastered, and that have thus become routine to you that can free up your time, [that] frees up your mental energy to think about harder problems. On the other hand, if we use automation to simply replace hard work, and therefore prevent you from fully mastering various levels of skills, it can actually have the opposite effect. Instead of lifting you up, it can establish a ceiling above which your mastery can't go because you're simply not practicing the fundamental skills that are required as kind of a baseline to jump to the next level."

---

[From http://www.computerworld.com/article/2845383/how-automation-could-take-your-skills-and-your-job.html]

Let's talk about software developers. In the book, you write that the software profession's push to "to ease the strain of thinking is taking a toll on their own skills." If the software development tools are becoming more capable, are software developers becoming less capable?

I think in many cases they are. Not in all cases. We see concerns -- this is the kind of tricky balancing act that we always have to engage in when we automate -- and the question is: Is the automation pushing people up to higher level of skills or is it turning them into machine operators or computer operators -- people who end up de-skilled by the process and have less interesting work. I certainly think we see it in software programming itself. If you can look to integrated development environments, other automated tools, to automate tasks that you have already mastered, and that have thus become routine to you that can free up your time, [that] frees up your mental energy to think about harder problems. On the other hand, if we use automation to simply replace hard work, and therefore prevent you from fully mastering various levels of skills, it can actually have the opposite effect. Instead of lifting you up, it can establish a ceiling above which your mastery can't go because you're simply not practicing the fundamental skills that are required as kind of a baseline to jump to the next level.

What is the risk, if there is a de-skilling of software development and automation takes on too much of the task of writing code?

There are very different views on this. Not everyone agrees that we are seeing a de-skilling effect in programming itself. Other people are worried that we are beginning to automate too many of the programming tasks. I don't have enough in-depth knowledge to know to what extent de-skilling is really happening, but I think the danger is the same danger when you de-skill any expert task, any professional task, ...you cut off the unique, distinctive talents that human beings bring to these challenging tasks that computers simply can't replicate: creative thinking, conceptual thinking, critical thinking and the ability to evaluate the task as you do it, to be kind of self-critical. Often, these very, what are still very human skills, that are built on common sense, a conscious understanding of the world, intuition through experience, things that computers can't do and probably won't be able to do for a long time, it's the loss of those unique human skills, I think, [that] gets in the way of progress.

What is the antidote to these pitfalls?

In some places, there may not be an antidote coming from the business world itself, because there is a conflict in many cases between the desire to maximize efficiency through automation and the desire to make sure that human skills, human talents, continue to be exercised, practiced and expanded. But I do think we're seeing at least some signs that a narrow focus on automation to gain immediate efficiency benefits may not always serve a company well in the long term. Earlier this year, Toyota Motor Co. announced that it had decided to start replacing some of its robots in its Japanese factories with human beings, with crafts people. Even though it has been out front, a kind of a pioneer of automation, and robotics and manufacturing, it has suffered some quality problems, with lots of recalls. For Toyota, quality problems aren't just bad for business, they are bad for its culture, which is built on a sense of pride in the quality that it historically has been able to maintain. Simply focusing on efficiency, and automating everything, can get in the way of quality in the long term because you don't have the distinctive perspective of the human craft worker. It went too far, too quickly, and lost something important.

Gartner recently came out with a prediction that in approximately 10 years about one third of all the jobs that exist today will be replaced by some form of automation. That could be an over-the-top prediction or not. But when you think about the job market going forward, what kind of impact do you see automation having?

I think that prediction is probably overaggressive. It's very easy to come up with these scenarios that show massive job losses. I think what we're facing is probably a more modest, but still ongoing destruction or loss of white collar professional jobs as computers become more capable of undertaking analyses and making judgments. A very good example is in the legal field, where you have seen, and very, very quickly, language processing software take over the work of evidence discovery. You used to have lots of bright people reading through various documents to find evidence and to figure out relationships among people, and now computers can basically do all that work, so lots of paralegals, lots of junior lawyers, lose their jobs because computers can do them. I think we will continue to see that kind of replacement of professional labor with analytical software. The job market is very complex, so it's easy to become alarmist, but I do think the big challenge is probably less the total number of jobs in the economy than the distribution of those jobs. Because as soon as you are able to automate what used to be very skilled tasks, then you also de-skill them and, hence, you don't have to pay the people who do them as much. We will probably see a continued pressure for the polarization of the workforce and the erosion of good quality, good paying middle class jobs.

What do you want people to take away from this work?

I think we're naturally very enthusiastic about technological advances, and particularly enthusiastic about the ways that engineers and programmers and other inventors can program inanimate machines and computers to do hard things that human beings used to do. That's amazing, and I think we're right to be amazed and enthusiastic about that. But I think often our enthusiasm leads us to make assumptions that aren't in our best interest, assumptions that we should seek convenience and speed and efficiency without regard to the fact that our sense of satisfaction in life often comes from mastering hard challenges, mastering hard skills. My goal is simply to warn people.

I think we have a choice about whether we do this wisely and humanistically, or we take the road that I think we're on right now, which is to take a misanthropic view of technological progress and just say 'give computers everything they can possibly do and give human beings whatever is left over.' I think that's a recipe for diminishing the quality of life and ultimately short-circuiting progress.

Miscellaneous

J-Class Yacht

A "J-Class" yacht is a single-masted racing sailboat built to the specifications of Nathanael Herreshoff's Universal Rule. The J-Class boats are considered the peak racers of the era when the Universal Rule determined eligibility for the America's Cup. The J-Class is one of several classes deriving from the Universal Rule for racing boats. The rule was established in 1903 and rates double-masted racers (classes A through H) and single-masted racers (classes I through S). From 1914 to 1937 the rule was used to determine eligibility for the America's Cup.

[[File:J-Class_Velsheda_solent_(416500624).jpg height="348",width="512"]]

In the late 1920s the trend was towards smaller boats and so agreement among American yacht clubs led to rule changes such that after 1937 the International Rule would be used for 12-metre class boats.[1]

Universal Rule formula

The Universal Rule formula[2] is:

$$R = \frac{0.18 \cdot L \cdot \sqrt{S}}{\sqrt[3]{D}}$$

Where:

· L is boat length (a number itself derived from a formula that includes Load Waterline Length, L.W.L, in feet)

· S is sail area

· D is displacement

· R is rating

Herreshoff initially proposed an index of .2, but the ratifying committees of the various yacht clubs changed this to, at various times, .18 or .185. This is, essentially, a 'fudge factor' to allow some boats designed and built prior to the adoption of the Universal Rule to compete. [3]

Figure: sail plan of a J-Class yacht

The numerator contains a yacht's speed-giving elements, length and sail area, while the retarding quantity, displacement, is in the denominator. The result is also dimensionally correct: R is a linear unit of length (such as feet or meters). J-Class boats have a rating of between 65 and 76 feet. This is not the overall length of the boat but a limiting factor for the variables in the equation. Designers are free to change any of the variables, such as length or displacement, but must adjust the other variables if the change would yield a different rating (or they must designate the craft as belonging to another class).
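The dimensional claim can be checked by hand. The sketch below assumes L is in feet, S in square feet and D is a volume in cubic feet; the units for S and D are an assumption here, since the text only states feet for L.W.L. The numeric line uses hypothetical round values (L = 80, S = 87² = 7,569, D = 17³ = 4,913) chosen so the roots come out exact; they are not the measurements of any actual yacht.

```latex
% Units: length times sqrt(area) over cube root of volume is a length
[R] = \frac{\mathrm{ft} \cdot \sqrt{\mathrm{ft}^2}}{\sqrt[3]{\mathrm{ft}^3}}
    = \frac{\mathrm{ft} \cdot \mathrm{ft}}{\mathrm{ft}}
    = \mathrm{ft}

% Hypothetical values: L = 80, S = 7569, D = 4913
R = \frac{0.18 \cdot 80 \cdot \sqrt{7569}}{\sqrt[3]{4913}}
  = \frac{0.18 \cdot 80 \cdot 87}{17}
  \approx 73.7\ \mathrm{ft}
```

With these illustrative inputs the rating falls inside the 65 to 76 foot band quoted for the J-Class. Raising L or S alone would push R upward, which is why a designer has to trade the variables against one another to stay in class.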

History and Evolution of the J Class

Prior to the adoption of the Universal Rule, the Seawanhaka Rule was used to govern the design of boats for inter-club racing. Because the Seawanhaka Rule used only two variables, Load Waterline Length ( L.W.L ) and Sail Area, racing boats at the time were becoming more and more extreme. Larger and larger sails atop shorter and wider boats led either to unwieldy, and ultimately unsafe, boats or to craft that simply were not competitive. [4][5] In order to account, in some ways, for the beam and the relationship of the length overall to the load waterline length, the Universal Rule was proposed, taking into account displacement and length, which itself was a result of a formula taking into account such things as "quarter beam length". As different boats were designed and built, the notion of classes was derived to maintain groupings of competitive boats.

Figure: The J-Class Endeavour of 1934, shown here in 1996

Following his near success in the 1920 America's Cup, Sir Thomas Lipton challenged again, for the last time, in 1929 at age 79. The challenge brought the innovations developed on small boats over the previous decade onto large boats, and pitted British and American yacht design against each other in a technological race. Between 1930 and 1937, the improvements brought to the design of sailboats were numerous and significant:

· The high-aspect bermuda rig replaces the gaff rig on large sailboats

· Solid-rod lenticular rigging for shrouds and stays

· Luff and foot grooved spars with rail and slides replacing wooden hoops

· Multiplication of spreader sets: one set previously (1914), two sets (1930), three sets (1934), four sets (1937)

· Multiplication of the number of winches: 23 winches, Enterprise (1930)

· Electrical navigational instruments borrowed from aeronautics with repeaters for windvane and anemometer, Whirlwind (1930)[6]

· "Park Avenue" boom (Enterprise, 1930) and "North Circular" boom (Rainbow, 1934) developed to trim mainsail foot[7]

· Riveted aluminium mast (4,000 lb (1,800 kg), Duralumin), Enterprise (1930)

· Genoa Jib (Rainbow, 1934) and quadrangular jib (Endeavour, 1934)[8]

· Development of nylon parachute (symmetric) spinnakers, including the world's largest at 18,000 sq ft (1,700 m2) on Endeavour II (1936)

· Duralumin wing-mast, Ranger (1937)

All these improvements might not have been possible without the context of the America's Cup and the stability offered by the Universal Rule. The competition was a bit unfair because the British challengers had to be constructed in the country of the challenging yacht club (a criterion still in use today), and had to sail on their own hulls to the venue of the America's Cup (a criterion no longer in use today). Such an undertaking required the challenging boat to be more seaworthy than the American boats, which were designed purely for speed in closed-water regattas. The yachts that remain in existence are all British, and probably log more nautical miles today than they ever did. This would not have been possible had Charles Ernest Nicholson not obtained unlimited budgets to achieve the quality of build for these yachts.